Gemini 3 is Here! Features, Release Date & How to Try (2025)

Lately, scrolling through X (Twitter) and Reddit, I’ve been totally bombarded with Gemini 3 news—one minute it’s “quietly rolling out in beta”, the next people are showing off “god-tier front-end dev examples”, and some are even claiming it officially dropped on October 9. Honestly, it’s enough to make your head spin!

But don’t worry. As someone who’s been keeping a close eye on the AI scene for years, I’ve gone through all the official updates, reliable leaks, and busted rumors. Today, I’m breaking down everything you need to know about Gemini 3—from the latest release news to new features and the most exciting predictions—so you can get the clearest, most trustworthy picture of what’s happening.

Has Gemini 3 Actually Been Released?

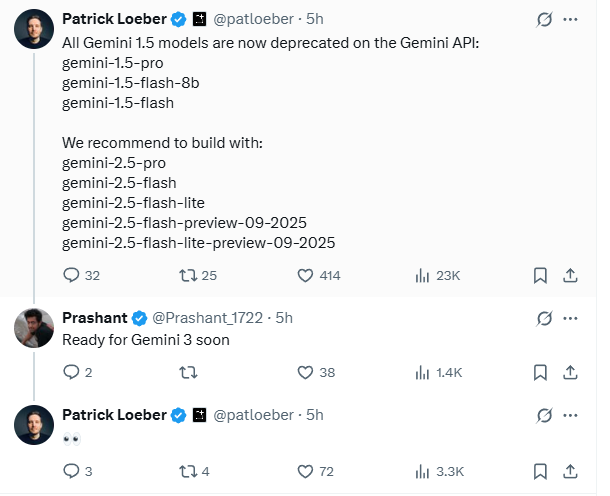

Let’s get straight to the point: as of now (November 2025), Google hasn’t officially released a model codenamed “Gemini 3”. That said, the internet has been buzzing with rumors, and it’s hard to tell what’s real and what’s not. Here’s a breakdown of the hottest recent ones:

“Released on October 9” — Debunked

This rumor blew up on social media in late September, with some people even making countdown graphics. But come October 9, Google didn’t make a peep. Later, trusted outlets like TechCrunch confirmed it was fake news.

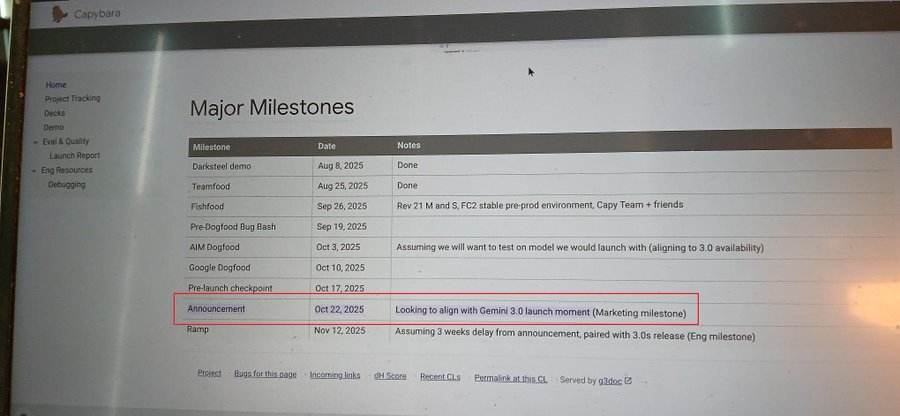

“Leaked internal doc says October 22 release” — Low credibility

Mid-October, a user shared what looked like an internal Google milestone document mentioning October 22 as a release date. The problem? No official stamps, no signatures, and Google never commented. October 22 has passed, and another date in the doc—November 12—still lacks official confirmation. Although Google CEO Sundar Pichai did confirm a 2025 release, the authenticity of this leak remains questionable.

“Beta version leaked” — Partially true

Some developers on X have shared screenshots of what they say is the Gemini 3 beta, like web pages with black hole visualizations or SVG vector graphics. But these appear to come from invite-only internal tests, so regular users don’t have access yet.

Nov 15 – The shadow-launch signal drops

A Reddit post claiming that Gemini 3’s internal test version was already live completely blew up. Some developers even said they could access Gemini 3 through the mobile app. Google kept playing mysterious with zero official announcements, but the testers’ reports were wild: coding speed reportedly jumped by about 30%, reasoning felt way smoother, and the multimodal understanding? Basically “third eye open” levels.

Meanwhile, Google’s CEO dropped a single “thinking face” emoji on social media, which the community instantly decoded as: “Yeah, I’m ready.”

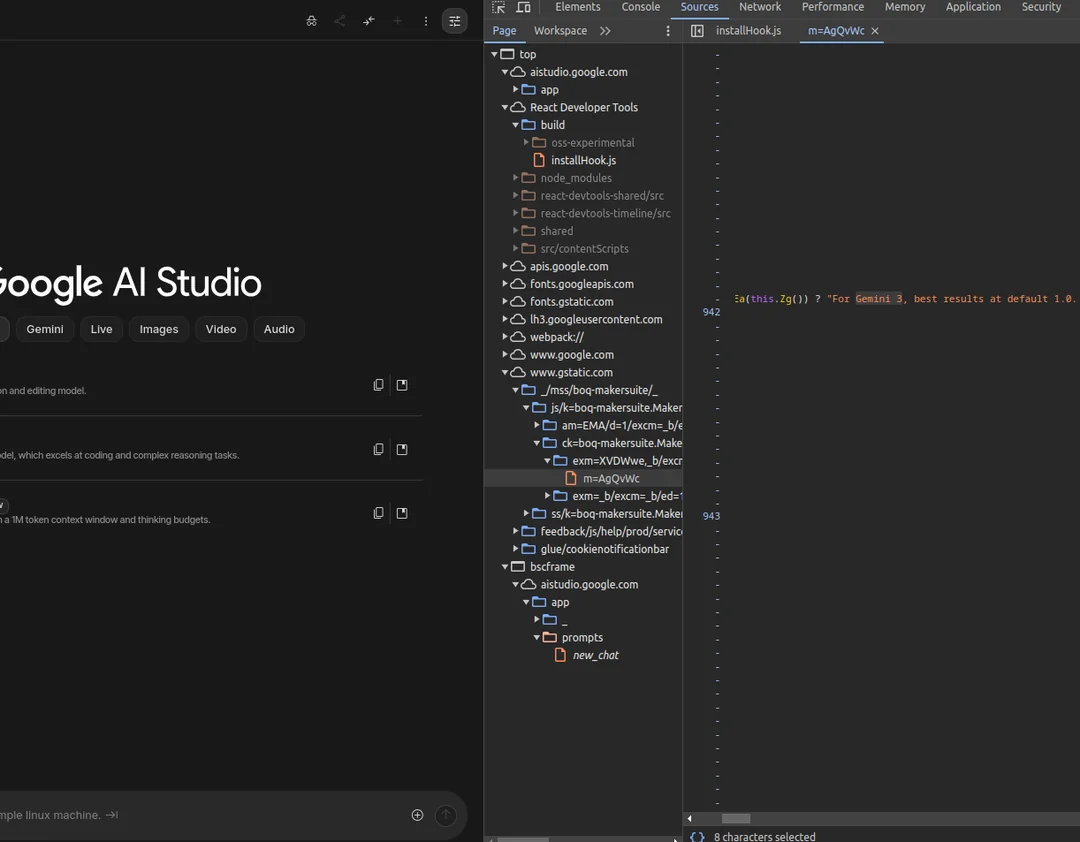

Nov 17 – The biggest leak yet

This was the day the smoking gun appeared. Inside Google AI Studio, a sharp-eyed dev spotted a hidden identifier: “gemini-3-pro-preview-11-2025.”

What does that tell us? Simple — Gemini 3 is already running internally. Google just hasn’t hit the big red “announce” button yet, letting a small circle test-drive it first. The whole community agrees: this is the strongest pre-launch signal so far.

Nov 18 – The day everyone quietly waited for Gemini 3

Even without an official announcement, Nov 18 basically turned into an unofficial Gemini 3 holiday. Developers noticed old models starting to migrate. Social media was full of “pre-launch night” hype. The vibe was unmistakable: something big is coming.

From vague “by the end of the year” promises to this very concrete, momentum-packed date, Google’s pace suddenly shifted from “slow float” to “full sprint.”

When Will Gemini 3 Actually Release?

Latest Official News on Gemini 3

Even though Google hasn’t formally announced “Gemini 3,” it’s clear they’re working on the next-gen AI, and the CEO has already confirmed it! Here’s the latest on the upcoming release and its key features:

November 2025 | CEO Confirmation

Sundar Pichai mentioned during the earnings call that the next-gen model (what everyone assumes is Gemini 3) will definitely be released this year. Honestly, this is the only official signal you can really trust, way better than any random rumor floating online.

Recent | Development Warm-Up

The Gemini API docs have quietly been updated, plus Google rolled out the Gemma 3 mini-model and Imagen 3 image model. Think of these as small warm-ups before the main release—it’s obvious the team is gearing up fast.

Industry Rumors | Performance Leaks

Tech media insiders hint that the new model will be faster, cheaper, and stronger at hardcore features like coding, reasoning, and multi-step tasks. Basically: “faster, smarter, and still affordable.”

Bottom line: Most claims that Gemini 3 has already dropped are just speculation. But with the CEO confirming a 2025 release, it’s coming soon. Google is clearly holding back a big reveal, so we’ll just have to be patient.

Predicted Release Timeline for Gemini 3

From watching Google AI launches for years, there’s a clear rhythm: big models either get a teaser at year-end or drop at the next year’s Google I/O. Based on that, here’s what to watch:

Earliest (Year-End)

Since the CEO said “this year,” Google could show a first release preview or highlight a few new features around late 2025 (maybe December) via a blog post or small online event.

Most Likely (Next Spring)

Google I/O usually happens in May. Historically, full releases and deep dives for major AI products happen there.

So realistically, between December 2025 and January 2026, we might see Google tease Gemini 3’s core features. For regular users to get full access—and for developers to really test its features—the 2026 Google I/O release is probably the most likely. That’s usually when Google drops the big guns.

Gemini 3 New Features: Official Info + Hands-On Highlights

First things first: Google hasn’t officially published a full feature list for the Gemini 3 release yet. But from the CEO’s earnings call, API doc updates, and beta leaks, we can piece together the core upgrades. Based on my years following the AI scene, I’ll split this into official confirmations and reliable predictions, then give a side-by-side comparison with GPT-5 and Claude 4.5.

Officially Teased Core Upgrades

I’ve sat through multiple public talks by Google execs, and Pichai & team have already “spoiled” most of Gemini 3’s breakthroughs. Some of the tech jargon sounds tricky, but here’s the simple version:

Intelligent Agents: Like hiring an AI assistant

Pichai specifically mentioned that the next-gen model can handle complex tasks on its own. Looking at the Gemini API docs, there’s a new multi-step task planning interface. My guess: it’ll act like a real assistant—you say, “Make a product launch PPT,” and it automatically breaks it down: find materials → write copy → design slides → export. No micromanaging every step, huge time saver.
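To make the “find materials → write copy → design slides → export” idea concrete, here’s a minimal Python sketch of sequential task planning, where each step reads a shared context and adds its result for the next step. This is plain Python, not Google’s API: the `Step` class, the `run_plan` helper, and the step names are hypothetical stand-ins for whatever planning interface Gemini 3 actually ships.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class Step:
    """One unit of work in a plan (hypothetical, for illustration)."""
    name: str
    run: Callable[[Dict], Dict]  # reads the shared context, returns new entries


def run_plan(steps: List[Step], context: Optional[Dict] = None) -> Tuple[Dict, List[str]]:
    """Execute steps in order, feeding each step's output into the shared context."""
    context = dict(context or {})
    log = []
    for step in steps:
        context.update(step.run(context))  # later steps can use earlier results
        log.append(step.name)
    return context, log


# Hypothetical breakdown of "Make a product launch PPT"
launch_deck = [
    Step("find materials", lambda ctx: {"materials": ["spec sheet", "press photos"]}),
    Step("write copy", lambda ctx: {"copy": f"Launch story from {len(ctx['materials'])} sources"}),
    Step("design slides", lambda ctx: {"slides": [ctx["copy"], "Pricing", "Q&A"]}),
    Step("export", lambda ctx: {"file": f"launch_deck_{len(ctx['slides'])}_slides.pptx"}),
]

result, log = run_plan(launch_deck)
print(log)             # ['find materials', 'write copy', 'design slides', 'export']
print(result["file"])  # launch_deck_3_slides.pptx
```

In a real agent, each `run` would be a model call or tool invocation, and the model itself would decide the steps; the sketch only shows the shape of the idea: ordered steps that pass state forward.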

Logical Reasoning: From “reading scripts” to actually thinking

Google’s leadership emphasized the new model is “smarter,” focusing on reasoning and multi-step tasks. Simply put: less nonsense, more reliable. It can trace complex command chains, debug code step by step, and analyze business data without mixing things up—truly moving from “information mover” to “problem solver.”

Performance & Cost: Fast, cheap, even mobile-friendly

Running on newer hardware like TPU v6 should make it faster and cheaper: lower latency, near-instant answers, and lower costs. Since the model runs in Google’s cloud, even budget phones should be able to use Gemini 3 smoothly, with no need to shell out for high-end devices.

Multi-Modal Integration: Seamless image, audio, video

With Imagen 3 (reportedly 40% better image accuracy) and Gemma 3 (reportedly 3× faster responses) as the tech base, the experience looks impressive. For example, give it a 10-second video and it could recognize the clothes, then generate 5 T-shirt designs with fun captions, all in real time. That could roughly double multi-modal creation efficiency.

Beta Leak Highlights

The Gemini 3 beta features have been buzzing in the AI community. Screenshots and case studies from Reddit and X (Twitter) show insider or test-user insights. I cross-checked multiple sources, and there’s a lot of overlap. Keep in mind, these are leaks—Google could tweak features anytime.

Long Context Window: Handle “millions of tokens” without forgetting

It can reportedly process millions to tens of millions of tokens at once, equivalent to several long novels or entire codebases. That’s ideal for theses or large dev projects: no need to feed data in chunks. Because it keeps the whole context in view, it won’t lose track of earlier instructions while you iterate on your code.
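How many novels is a million tokens? Here’s a back-of-the-envelope sketch, assuming roughly 0.75 English words per token (a common rule of thumb; real tokenizers vary by model and language) and about 100,000 words for a long novel (also an assumption):

```python
WORDS_PER_TOKEN = 0.75   # assumed average for English prose; varies by tokenizer
NOVEL_WORDS = 100_000    # assumed length of a "long novel"


def tokens_for_words(words: int) -> int:
    """Approximate token count for a given number of English words."""
    return round(words / WORDS_PER_TOKEN)


def novels_that_fit(context_tokens: int) -> float:
    """Approximate number of long novels that fit in a context window."""
    return context_tokens * WORDS_PER_TOKEN / NOVEL_WORDS


print(tokens_for_words(NOVEL_WORDS))  # 133333 tokens per novel
print(novels_that_fit(1_000_000))     # 7.5 novels in a 1M-token window
```

So “millions of tokens” does line up with “several long novels”; at ten million tokens, the same estimate gives about 75.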

Native Multi-Modal: Understand plot and humor, not just describe images

Strengthened cross-modal understanding: it can handle images, audio, even video. It might “get the joke in a movie clip” or “draw a scene matching background music”—not just give a rough description.

Special Performance Optimizations: SVG/code generation, less “flattery”

It can generate animated SVGs and turn sketches into interactive web prototypes. One developer drew a shopping cart, and Gemini 3 generated full code with animations, popups, and compatibility comments in minutes.

Architectural Upgrade: Mixture-of-Experts (MoE) Model

Behind the scenes, it reportedly uses a dynamic “trillion-parameter” mixture-of-experts model. Only a small subset of experts activates for each token, so the model keeps huge capacity while staying fast. Think of it as a big team of specialists: a router sends each piece of your request to the few experts best suited for it, instead of making the whole team work on everything.
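For readers curious what “only part of the model activates” means mechanically, here is a toy top-k mixture-of-experts layer in plain Python. It is a generic sketch of the technique, not Gemini’s architecture (which Google hasn’t published): a gate scores every expert, only the top `k` experts actually run, and their outputs are blended with softmax weights. The expert functions, gate vectors, and `k=2` are made-up toy values.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: Vector, experts: List[Callable[[Vector], Vector]],
                gates: List[Vector], k: int = 2) -> Tuple[Vector, List[int]]:
    """Route x to the k highest-scoring experts and mix their outputs."""
    # Gate score for each expert: dot product of x with that expert's gate vector.
    scores = [sum(g_i * x_i for g_i, x_i in zip(g, x)) for g in gates]
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    weights = softmax([scores[i] for i in chosen])
    out = [0.0] * len(x)
    for w, i in zip(weights, chosen):
        y = experts[i](x)  # only the chosen experts do any work
        out = [o + w * y_j for o, y_j in zip(out, y)]
    return out, chosen


calls: List[int] = []  # records which experts actually ran


def make_expert(idx: int, scale: float) -> Callable[[Vector], Vector]:
    def expert(v: Vector) -> Vector:
        calls.append(idx)
        return [scale * v_j for v_j in v]
    return expert


experts = [make_expert(i, s) for i, s in enumerate([1.0, 2.0, 3.0, 4.0])]
gates = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, -1.0]]

out, chosen = moe_forward([1.0, 2.0], experts, gates, k=2)
print(chosen)  # [2, 1]: the two best-scoring experts out of four
print(calls)   # [2, 1]: experts 0 and 3 never ran
```

In real MoE models the routing happens per token inside each layer and the experts are neural sub-networks, but the saving is the same idea: compute grows with `k`, not with the total number of experts.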

Nano Banana 2: Instantly Your Pocket AI Photo Editor

Reddit users spotted traces of Nano Banana 2 in Gemini’s website code — looks like it’s gearing up to launch alongside Gemini 3! This update seems to turn Nano Banana 2 into a true creative assistant in your pocket: drop it an image, and it handles all the visual magic for you. Expect finer image editing, smoother multi-image blending, and generation that actually gets your style.

Whether it’s cover photo optimization, cross-platform content packaging, photo retouching, AI portrait generation, or those last-minute “need this done yesterday” shots — it’s ready to go, no learning curve required.

I’ve been using the free version of Nano Banana with my studio colleagues for a while — perfect for everyday e-commerce images or social media posts. Just a few clicks and you get polished results, plus a chance to experiment with fun creative ideas. You really don’t need to wait for Nano Banana 2 to drop — the free version already covers most editing needs. Start boosting your workflow now, and when Nano Banana 2 lands, everything will feel even smoother.

Gemini 3 vs GPT-5 / Claude 4.5: Practical Use Cases

Gemini 3 is shaking up the AI space. Developers and enterprises are asking, “Should we pause our GPT-5 subscriptions for the Gemini 3 release?” or “Can it replace Claude for annual report analysis?” People are comparing it with GPT-5 and Claude 4.5 on both specs and practical scenarios.

| Feature / Scenario | Gemini 3 (Beta/Leaked) | GPT-5 | Claude 4.5 (Speculated) |
|---|---|---|---|
| Programming | Front-end powerhouse: sketch → code, SVG animation; back-end moderate | Full-stack strong, excels in complex algorithms; front-end less creative | Good for document-style code (Excel, SQL); complex tasks need step-by-step prompts |
| Multi-Modal | Full video/audio/image chain; Imagen 3 enhances image gen; audio emotion matched | Top video understanding; weak audio; images single-style | Strong long-text + multi-modal (e.g., 1000-page PDFs), images average |
| Intelligent Agent | Multi-step tasks autonomously, supports project planning (PPT, events) | Real-time info aggregation; task breakdown needs explicit instructions | Long-flow task tracking (cross-month projects); slower real-time response |
| Cost & Speed | 2–3× faster, 50% lower inference cost (leaked, unconfirmed) | Medium speed, higher API cost (launch list price: $1.25 per 1M input tokens, $10 per 1M output tokens) | Slower, but long-context cost ~30% lower than GPT-5 |
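Claims like “50% lower inference cost” are easier to judge against a concrete bill. This sketch prices a workload per million tokens and applies the rumored 50% cut; the token counts and per-million prices are placeholder numbers chosen for illustration, not any vendor’s actual rates. (A price quoted per 1k tokens converts to per-1M by multiplying by 1,000.)

```python
def api_cost(input_tokens: int, output_tokens: int,
             input_price_per_m: float, output_price_per_m: float) -> float:
    """Dollar cost of a workload, given prices per 1M input/output tokens."""
    return (input_tokens * input_price_per_m
            + output_tokens * output_price_per_m) / 1_000_000


# Placeholder workload and prices, for illustration only.
baseline = api_cost(2_000_000, 500_000, input_price_per_m=2.00, output_price_per_m=8.00)
rumored = baseline * (1 - 0.50)  # applying the leaked "50% lower inference cost"

print(f"baseline: ${baseline:.2f}, with a 50% cut: ${rumored:.2f}")
# baseline: $8.00, with a 50% cut: $4.00
```

Since output tokens usually cost more than input tokens on current APIs, the input/output mix of your workload matters as much as the headline discount.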

Scenario Examples:

- Front-End Dev (Sketch → Interactive Web)
  - Gemini 3: nails it! Hand-drawn cart → full code with animation & compatibility comments, done in 3 min.
  - GPT-5: needs extra instruction for animation; manual tweaks required.
  - Claude 4.5: focuses on static structure; complex interactions need stepwise guidance.
- Long Document Analysis (500-page PDF + charts)
  - Claude 4.5: excels, precise cross-page data extraction.
  - Gemini 3: handles long text easily; chart analysis slightly weaker.
  - GPT-5: faster than Claude, but misses details in docs >300 pages.
- Real-Time Info Aggregation (Stock + News)
  - GPT-5: fast real-time data capture, clear summaries.
  - Gemini 3: moderate speed, richer analysis dimensions.
  - Claude 4.5: slow real-time, better for long-term trend analysis.
- Multi-Modal Creation (Video → Images + Copy)
  - Gemini 3: precise video content recognition, diverse image styles, high emotional match in copy.
  - GPT-5: high video understanding, single-style images.
  - Claude 4.5: excellent copywriting, image gen average.

Summary: Gemini 3 excels at creative work and front-end dev; GPT-5 shines in real-time info and full-stack programming; Claude 4.5 is best for deep long-text analysis. Pick the right tool for your needs.

Gemini 3 Hands-On Reviews: Early Beta Features

I haven’t gotten my own hands on the Gemini 3 release yet, but the experiences beta users have shared on X and Reddit have already got me drooling! These are screenshots and videos posted by the testers themselves. Here are a few features and highlights that really stood out:

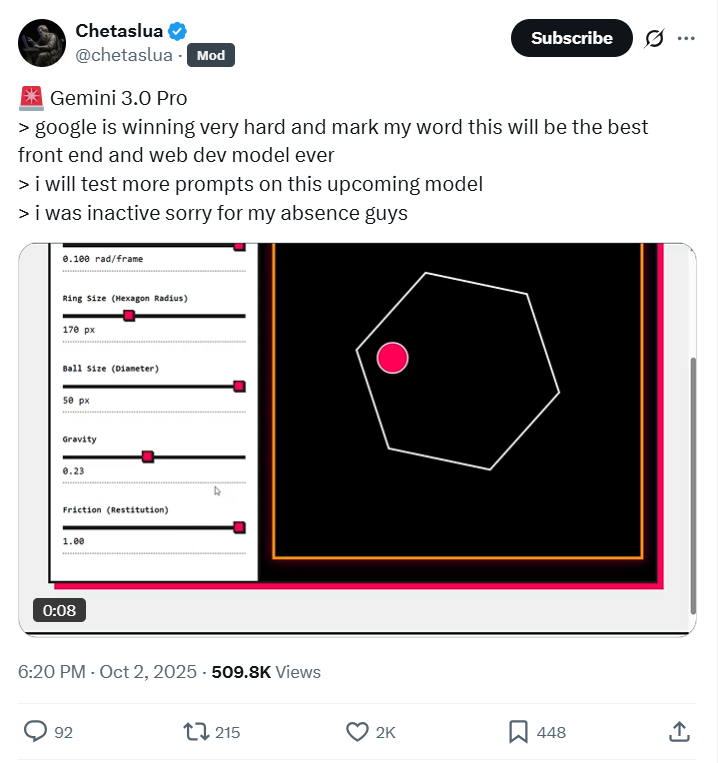

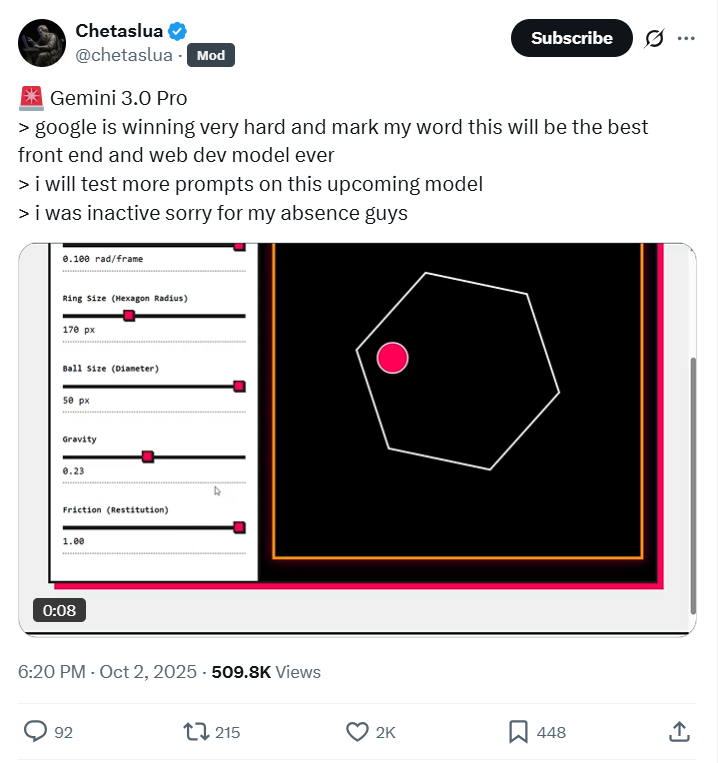

SVG Animations: A Front-End “Shortcut Tool”

Take SVG vector graphics, for example. You can casually say,

“Make a rotating planet icon with the orbit moving along with it,”

and it spits out copy-paste-ready code. Animations, timing, transitions—all perfectly tuned. AI blogger @chetaslua shared a demo and was blown away:

“Google is winning very hard and mark my word this will be the best front end and web dev model ever.”

Watching the video, the way Gemini 3 combines multi-modal understanding with code generation is just silky smooth—definitely one of the standout features of this early release.
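For a sense of what “copy-paste-ready” SVG animation code looks like, here is a hand-written example (not Gemini output) for the rotating-planet prompt, produced by a small Python helper so the sizes are adjustable. It uses standard SVG SMIL animation (`<animateTransform>`), which modern browsers support; the function name and default sizes are illustrative choices. The planet and the dashed orbit sit in the same group, so the orbit’s dashes visibly turn along with the planet.

```python
def planet_svg(size: int = 200, orbit_r: int = 70, planet_r: int = 12,
               period_s: float = 4) -> str:
    """Return SVG markup for a planet circling a sun, with the orbit rotating too."""
    c = size // 2  # centre of the canvas, where the sun sits
    return f"""<svg xmlns="http://www.w3.org/2000/svg" width="{size}" height="{size}" viewBox="0 0 {size} {size}">
  <circle cx="{c}" cy="{c}" r="20" fill="#f5b700"/>
  <g>
    <circle cx="{c}" cy="{c}" r="{orbit_r}" fill="none" stroke="#888" stroke-dasharray="6 6"/>
    <circle cx="{c + orbit_r}" cy="{c}" r="{planet_r}" fill="#3a7bd5"/>
    <animateTransform attributeName="transform" type="rotate"
                      from="0 {c} {c}" to="360 {c} {c}"
                      dur="{period_s}s" repeatCount="indefinite"/>
  </g>
</svg>"""


print(planet_svg())
```

Save the output as `planet.svg` and open it in a browser; changing `period_s` speeds the orbit up or slows it down.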

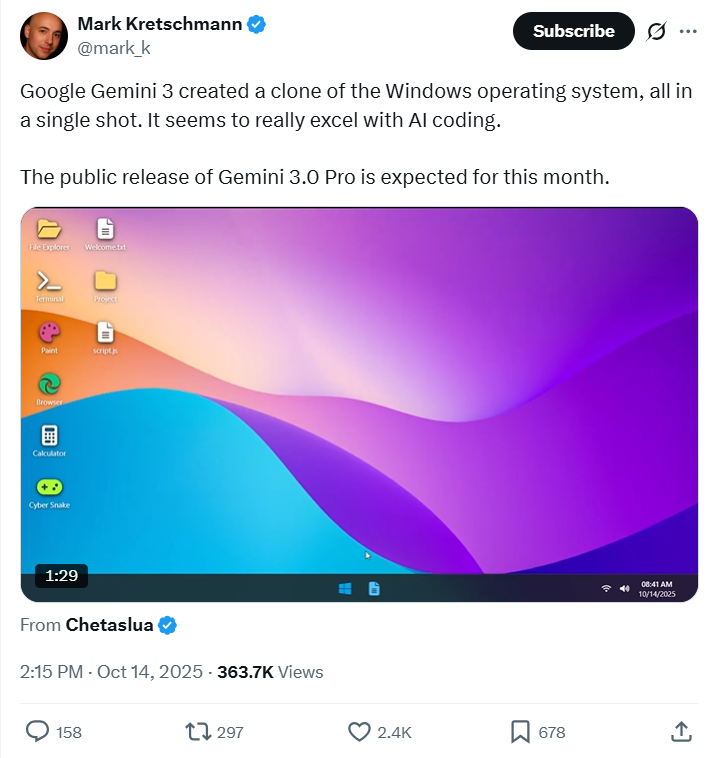

“Code Operating System”: Build Complex Frameworks in One Prompt

Even crazier: with a single prompt, Gemini 3 can generate full operating system frameworks or complex SaaS app code—front-end UI, back-end logic, even command-line interfaces. @chetaslua shared a jaw-dropping case:

“Google Gemini 3 created a clone of the Windows operating system, all in a single shot. It seems to really excel with AI coding.”

This showcases another amazing feature in the beta release—building complex systems in just one shot.

Massive Document Analysis: Handling 150,000 Tokens

Reddit users also shared their experiences. One post in r/singularity, “Gemini 3.0 Pro spotted”, mentioned trying out 150,000-token ultra-long conversations in AI Studio. While there were occasional hallucinations, the efficiency was impressive—showing that this release handles long-context tasks like huge documents or extended dialogues with ease. This long-context handling is another killer feature that sets Gemini 3 apart.

Overall Impressions: Across the board, beta users are raving about Gemini 3’s speed and ability to bring creative ideas to life, especially for front-end and design work. Almost no negative feedback. Honestly, seeing this makes me even more eager to try it myself!

How to Experience Gemini 3

Recently, I saw a demo from a Gemini 3 beta user in a developer group—turning a hand-drawn sketch into an interactive web page. I was immediately sold! You just draw a quick wireframe, and it spits out animated code. The efficiency is insane. Naturally, many friends have been asking me: “When can we use it?” or “How do we get beta access?”

Based on Google’s past product release patterns, I’ve put together a step-by-step guide. Once Gemini 3 officially drops, you’ll know exactly how to jump in.

Official Gemini 3 Access

Currently, Google hasn’t opened public access yet, but from my experience with Gemini 2.5, their usual pattern is: first, they release API access in Google AI Studio for developers, then a few weeks later, new features appear in the Gemini App and search. Most likely, Gemini 3 will follow the same path.

| Channel | How to Experience | Cost / Price | Personal Recommendation |
|---|---|---|---|
| gemini.google.com | Upgrade Gemini (free & paid versions) | Based on Gemini 2.5: basic features free (limited), advanced features bundled in Google One AI Premium (~$19.99/month) | Most reliable, full features. Everyday users should consider a membership. |
| Developer API | Use Gemini API to access the new model | Token-based pricing; usually higher than previous versions at launch | Suitable for developers or tech users needing deep AI integration. |

Gemini 3 Beta: How to Be Among the First

I tried applying for the Gemini 2.5 beta before—and made the rookie mistake of just submitting a basic info form. Nothing happened. Google keeps these high-profile releases super tight, usually following Beta or Preview flows. Based on my experience and friends in the AI circle, there are several ways people have actually gained early access:

| Beta Type | How to Join | Best For |
|---|---|---|
| Official Early Access | Apply via Google AI Blog / Developer Channel, look for “Early Access Program” or “Trusted Tester” links | Top developers, large enterprise users, long-term AI research partners |
| Gemini Advanced Subscribers | Paid subscription: existing Gemini Advanced members often get priority in A/B testing or limited previews | Individuals seeking top performance |
| Active Community Contributors | Participate in forums, Reddit (e.g., r/GeminiAI), give feedback on bugs/features; Google may invite top contributors | Enthusiastic AI users, deep product understanding |
| Academic Research Collaboration | Submit requests via Google’s academic programs if doing LLM/multi-modal research | Researchers, university students/faculty |

Bottom line: For regular users, the easiest way to beta access is to subscribe to Gemini Advanced—you’ll usually get to test the flagship features before free users. Developers need to keep an eye on Google AI Studio announcements.

Cost & How to Save on Membership

From my experience paying for Gemini 2.5, Google usually sticks to “basic features free + advanced features paid.” Currently, Gemini 2.5’s advanced features are in Google One AI Premium at $19.99/month. With Gemini 3’s upgrades (multi-modal, intelligent agents, etc.), the price might rise slightly, maybe to $22.99/month. But basic stuff like research or copywriting should still be free for everyday users.

To fully unlock Gemini 3, a membership is key—multi-modal creation, long-document analysis, code generation: all efficiency boosters rely on the paid tier.

Practical tips:

- Official Channel: Buy a Google AI Pro membership via the Gemini site. In the U.S., it’s $19.99/month and includes use in Gmail and Docs, 2TB of cloud storage, access to strong models (like Gemini 2.5 Pro), and 1M-token context windows. Pros: fastest updates, stable service, easy support. Cons: no discounts, one account per user.

- Third-Party Platform: I later discovered FamilyPro, a third-party account-sharing platform, and honestly, the price and service blew me away! Last month, I helped my brother and sister-in-law get Google AI Pro (with Gemini 2.5) through FamilyPro. Officially, it costs $19.99/month, but FamilyPro sold it for just $5.99, which is real value for money. My brother said it works exactly like the official account, with all updates syncing in real time. One time, he locked his account after entering the wrong password. He contacted FamilyPro’s 24/7 support, sent proof of purchase, and they unlocked it in just three minutes, way faster than waiting half an hour for official support. Plus, FamilyPro offers a 7-day warranty. I even tested the features for three days after buying to make sure everything worked before sharing it with him. That kind of guarantee makes using it super reassuring.

Summary:

- If you want first access and maximum reliability, go official.

- If you want to save some cash and still get full features, FamilyPro is the clear choice. Bookmark it now, and once Gemini 3 releases, you can jump straight in—no scrambling for links.

FAQ

Q1: When will Gemini 3 be released?

A: Google hasn’t shared an exact date yet, but the CEO has confirmed the next-gen model is in development and due in 2025. Most industry watchers expect a developer preview around late 2025 or early 2026, with a broader release shortly after.

Q2: What are Gemini 3’s core new features?

A: The biggest breakthroughs are deep reasoning and advanced multi-modal integration. Rumored features include handling millions of tokens in long-context memory and generating high-fidelity code and UI—exactly the kind of upgrades that make this release exciting.

Q3: How much stronger is Gemini 3 compared to GPT-5 or Claude 4.5?

A: Unconfirmed benchmark leaks suggest Gemini 3 dominates in code generation, UI fidelity, and complex reasoning. Officially, Google aims to maintain a lead in multi-modal features and long-context handling.

Q4: How can regular users get early access to Gemini 3?

A: The main channel is Google AI Studio. Some users can try it early via A/B testing interfaces. Full access usually requires an AI Pro or AI Ultra membership, with certain features gated behind paid tiers.

Q5: Is Gemini 3 free to use?

A: Some features are free—usually limited usage in Google AI Studio. But to fully explore the model, including long-context memory, multi-modal features, and advanced tools, you’ll need an AI Pro or AI Ultra subscription.

Conclusion: Why Gemini 3 Really Matters

When following Gemini 3, I’m honestly less concerned about the exact release date and more curious about whether it can truly turn AI from a “helper hand” into something that can handle tasks independently. Based on my years of keeping an eye on the AI space and my own experience using AI, here’s the practical take: knowing what it can actually solve matters far more than just waiting for the release.

Key Info at a Glance

- Launch Timing: The CEO has confirmed a 2025 release. We might see a technical preview as early as December 2025, but for the full version, the most realistic release window is Google I/O 2026.

- Core Features: Officially, intelligent agents and upgraded reasoning are the big draws. Beta testers rave about front-end features that turn sketches into code and the ability to process millions of tokens without “forgetting”—these alone save a ton of time.

- Pricing Tip: Still “basic free + advanced paid.” Want to save money? Keep an eye on FamilyPro: much cheaper than official channels and great value for full-feature access.

What Changes Could Gemini 3 Bring?

The real significance of Gemini 3 is turning AI from a chatty assistant into a professional-grade outsourcing tool:

- For regular users: No need to learn coding or design to create. Sketch a wireframe, turn a clip into copy—one prompt does it all. You focus on the idea, not the execution.

- For front-end developers: Gemini 3 could take over as much as 70% of repetitive tasks, like basic UI coding and recreating design mockups, letting devs focus on core creativity.

- For the industry: Gemini 3 isn’t trying to be “everything for everyone.” By specializing in front-end and multi-modal tasks, it pushes competitors to upgrade, which ultimately benefits us, the users.

Even though it hasn’t officially been released yet, the beta examples are enough to get anyone hyped. Once it’s live, I’ll test it firsthand—make sure to check back for my full report on the features in action!