ChatGPT Pros and Cons (2025): GPT-5 Features, Real Gains vs. Hype, and Pricing Compared (O3, GPT-4.5, Copilot, Gemini, Claude)

GPT-5 did move the needle on reliability, long-context retrieval, and unified routing—but it’s not AGI, it doesn’t run fully offline, and cost/context trade-offs still matter. If you’re deciding between O3, GPT-4.5, or GPT-5—and between ChatGPT vs. Copilot/Gemini/Claude—this guide gives practical, citation-backed answers.

1. Context: What OpenAI Actually Shipped with GPT-5 (and Why)

When Sam Altman talked publicly about GPT-5, one theme kept repeating: reliability, especially reducing hallucinations. That framing matters: instead of flashy, speculative features, OpenAI signaled “get the basics right” for professional work. (Source: tweet by Sam Altman)

What we got at launch aligns with that message:

A unified system that stops asking you to hand-pick “which model” (GPT-4, Turbo, o-series, etc.). GPT-5 in ChatGPT is presented as a router-backed “super system” that automatically switches between fast and deep-thinking modes. OpenAI’s developer materials describe GPT-5 as a coordinated system of reasoning-models plus router logic; the public API now exposes gpt-5, gpt-5-mini, and gpt-5-nano options for performance/cost trade-offs. OpenAI GPT-5 for developers

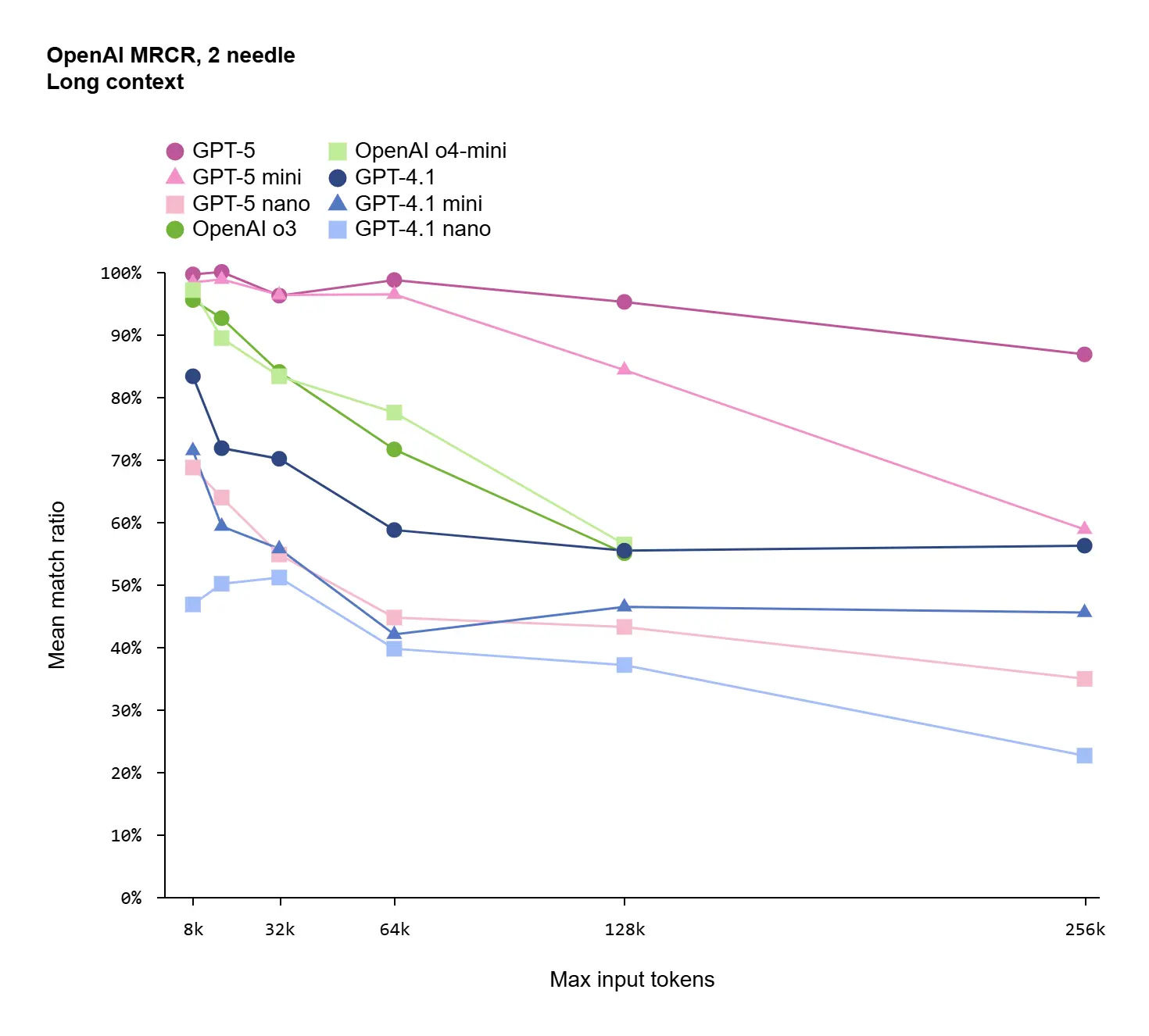

Long-context gains with measurable retrieval improvements compared to O3 and GPT-4.1, plus an API offering up to 400K combined tokens (input+output) across GPT-5 variants. OpenAI GPT-5 product page

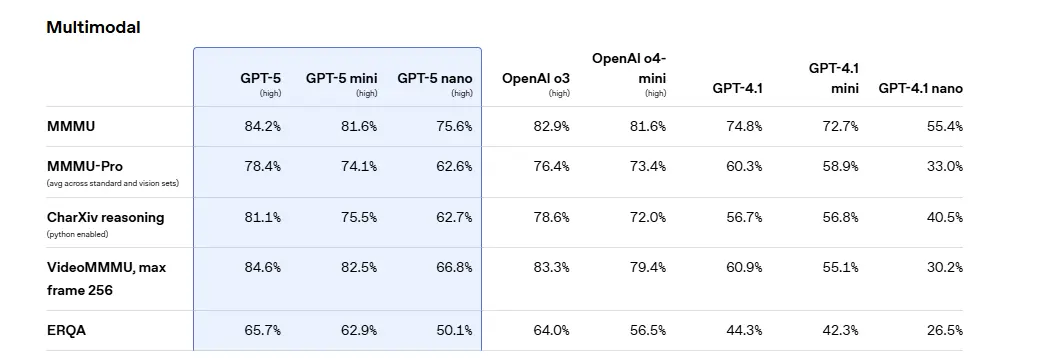

Multimodal by default (text + vision, audio workflows integrated), plus improvements in the Responses API for tooling and agentic flows. OpenAI Responses API update

At the same time, some things didn’t ship (or remain true):

- No AGI or "subjective intent." GPT-5 remains a powerful statistical model without consciousness or self-motivation. OpenAI positions it as better at long-context and tool use, but not autonomous reasoning.

- No fully local, offline GPT-5. Access remains cloud-only, through either the ChatGPT interface or the API. Developers seeking on-prem solutions must still rely on open-source or hybrid workflows.

Why this strategy?

Altman’s focus on reliability suggests OpenAI prioritized trustworthiness over hype. The routing architecture balances performance and output quality, managing latency and hallucinations in one unified experience.
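To make the routing idea concrete, here is a minimal client-side sketch that chooses among the three API model names mentioned above. OpenAI has not published its router logic, so this is not it: the `Task` fields, the 200-character threshold, and the `pick_model` function are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Task:
    """Hypothetical description of a request; the fields are assumptions."""
    prompt: str
    needs_deep_reasoning: bool = False
    latency_sensitive: bool = False


def pick_model(task: Task) -> str:
    """Pick one of the API model names listed in the article.

    These rules are illustrative heuristics, not OpenAI's router.
    """
    # Hard problems go to the largest tier regardless of latency.
    if task.needs_deep_reasoning:
        return "gpt-5"
    # Short, latency-sensitive prompts go to the cheapest tier.
    if task.latency_sensitive and len(task.prompt) < 200:
        return "gpt-5-nano"
    # Everything else lands on the middle tier.
    return "gpt-5-mini"


if __name__ == "__main__":
    print(pick_model(Task("Refactor this module", needs_deep_reasoning=True)))
    print(pick_model(Task("Translate 'hello'", latency_sensitive=True)))
```

In practice the thresholds would be tuned against your own latency and quality measurements rather than fixed up front.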

2. ChatGPT Pros and Cons—Framed for Real-World Work

Pros

Unified “just ask” experience

No juggling between O3, 4.5, or 5. GPT-5 automatically picks the most appropriate internal mode, saving mental load. (Source: OpenAI developer announcement)

Fewer hallucinations, stronger reasoning discipline

Benchmarks show GPT-5 outperforms O3 and GPT-4.1 in long-context reasoning tasks. (Source: GPT-5 for developers)

Broader long-context workflows

Up to 400K tokens enables book-length summarization, codebase analysis, and research compilation in one session. (Source: GPT-5 product page)

Multimodal as table stakes

Support for text, vision, and audio is integrated; enhanced API features support richer agent behaviors. (Source: Responses API)

Clear developer SKUs

The lineup ranges from gpt-5 to gpt-5-nano, giving clear signals on trade-offs between intelligence, latency, and price. (Source: GPT-5 for developers)

Cons

Not AGI; still “pattern-based”

GPT-5 doesn’t have goals or self-awareness; improved output comes from better modeling of text patterns.

Latency in deep mode

While accurate, deeper reasoning paths may run slower than O3’s fast responses.

Cloud-only access

No offline usage; on-prem solutions require alternative models.

Pricing and plan friction

Choosing between API billing and a Chat subscription takes some thought; some teams consider platforms like FamilyPro.io for cost-effectiveness (see section 7).

3. GPT-5 vs O3 vs GPT-4.1: “Which Is Better — O3 or 4.5 ChatGPT?”

| Dimension | O3 | GPT-4.1 | GPT-5 |
|---|---|---|---|
| Core design | Reasoning-first with “think longer” behavior | General-purpose GPT with better instruction-following and context | Unified router, auto fast vs deep thinking |
| Speed (feel) | Fastest on many short tasks | Medium | Fast in quick mode; slower in deep mode |
| Reasoning depth | High | Improved vs GPT-4.0 | Best overall, plus routing |
| Context handling | Varies | Better long context | Top: up to 400K tokens |
| Multimodal | Limited | Present in API | Native and robust |
| When to choose | Speed + reasoning | General tasks | Mixed workloads, deep analysis, long docs |

O3 was introduced as a reasoning-first model that “thinks longer” on qualitative tasks.

GPT-4.1 improved instruction-following and extended context capabilities.

GPT-5 offers the best of both worlds via routing and long-token support.

Bottom line: If you're choosing in 2025, GPT-5 is the practical go-to—no version juggling and strong long-context capability.

4. What Shipped vs. What Got Cut (Reading the Tea Leaves)

Shipped (confirmed): reliability improvements, long-context retrieval, unified routing, developer SKUs, multimodal support.

Not shipped (yet):

- Full autonomy or self-directed agents (no “self-driving AI”).

- Offline GPT-5 deployment.

Why? OpenAI emphasized practicality and trust—news coverage during the launch also noted reliability bumps.

5. ChatGPT vs Copilot vs Gemini vs Claude (Updated 2025)

| Capability | ChatGPT-5 | Microsoft Copilot | Google Gemini | Anthropic Claude |
|---|---|---|---|---|
| Breadth | ★★★★★ unified router + tools | ★★★★ deep M365 tie-ins | ★★★★★ native multimodal | ★★★★★ conservative long-form |
| Coding | ★★★★ | ★★★★★ (IDE integration) | ★★★★ | ★★★★ |
| Context window | Up to 400K tokens | Varies by product | 1M tokens available | 200K standard |
| Tooling & agents | Mature API & Responses | Copilot Studio & connectors | Vertex AI + RAG tooling | Fewer integrations |
| Docs | GPT-5 API/pricing | Microsoft docs | Gemini API info | Claude documentation |

References: OpenAI dev docs, Microsoft Copilot docs, Google Gemini API docs, Anthropic model specs.

Takeaways:

- Copilot is best for Microsoft 365 workflows.

- Gemini suits ultra-long context RAG apps.

- Claude excels at conservative long-form writing.

- ChatGPT-5 offers a broad balance, automatic routing, and a strong ecosystem.

6. Running ChatGPT Locally — What’s Realistic?

Short answer: You can’t run GPT-5 offline—the model weights aren’t public. Access remains cloud-only.

However, for air-gapped or self-hosted needs, here’s what you can do:

6.1. Why GPT-5 Isn’t Local

- Closed weights — not published.

- Extreme hardware needs — expect 100B+ models needing multi-node GPUs.

- Cloud strategy — OpenAI updates and safety filters run server-side.

6.2. Viable Local Alternatives

| Model | Params | License | Highlights |
|---|---|---|---|
| GPT-OSS 20B Base | 20.9B | MIT | LoRA-tuned GPT-OSS variant; local download/run possible |
| LLaMA 3 | 8B / 70B | Community | Strong open-source ecosystem |
| Mistral / Mixtral | 7B / MoE | Apache 2.0 | Efficient, multilingual |
| Qwen | 7B / 72B | Apache 2.0 | Chinese/multilingual strong |
| Yi | 6B / 34B | Apache 2.0 | Long context optimized |

Example: gpt-oss-20b-base is a local model (MIT licensed) suitable for testing—far from GPT-5 quality but deployable.

6.3. Local Model Deployment Cheat Sheet

| Model | Params | FP16 VRAM | INT4 VRAM | Speed* | Hardware | Est. Cost (CapEx) |
|---|---|---|---|---|---|---|
| GPT-OSS 20B | 20.9B | ~42 GB | ~14 GB | ~7–9 tok/s | 2× RTX 3090 / 4090 or 1× A100 40GB | ~$3–5K |
| LLaMA 3 8B | 8B | ~16 GB | ~6 GB | ~15–20 tok/s | RTX 3090/4090 | ~$1.5–2K |
| LLaMA 3 70B | 70B | ~140 GB | ~40 GB | ~3–4 tok/s | 4× A100/H100 | ~$40–60K |
| Mixtral 8×22B | Active 2×22B | ~88 GB | ~30 GB | ~6–8 tok/s | 2× A100 80GB | ~$25–35K |
| Qwen 72B | 72B | ~140 GB | ~40 GB | ~3 tok/s | 4× A100/H100 | ~$40–60K |

*Speeds are approximate and depend on quantization and batch size.
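The FP16 column above follows from simple arithmetic: parameters times bytes per parameter. The sketch below reproduces that calculation for weights only. The function name `weight_memory_gb` is a hypothetical helper, and the result deliberately ignores KV cache, activations, and quantization metadata, which is a plausible reason the table's INT4 figures sit above the raw weight size.

```python
def weight_memory_gb(params_billions: float, bits_per_param: int) -> float:
    """Approximate memory for model weights only, in GB (1 GB = 1e9 bytes).

    Excludes KV cache, activations, and quantization overhead.
    """
    bytes_per_param = bits_per_param / 8
    # (params_billions * 1e9 params) * bytes_per_param / 1e9 bytes per GB
    return params_billions * bytes_per_param


if __name__ == "__main__":
    models = [("GPT-OSS 20B", 20.9), ("LLaMA 3 8B", 8.0), ("LLaMA 3 70B", 70.0)]
    for name, params in models:
        fp16 = weight_memory_gb(params, 16)
        int4 = weight_memory_gb(params, 4)
        print(f"{name}: FP16 weights {fp16:.1f} GB, INT4 weights {int4:.1f} GB")
```

Treat the output as a lower bound when sizing hardware; real deployments need headroom beyond the weights themselves.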

6.4. Deployment Patterns

- Local + API Hybrid: Store sensitive data locally; delegate complex reasoning to GPT-5 via API with redaction.

- Local Inference for Volume, Cloud for Quality: Run open models for drafts/filters; escalate only key tasks.

- On-Prem Cloud: Certain vendors offer isolated cloud deployments (not fully offline).
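The "Local + API Hybrid" pattern above depends on redacting sensitive data before anything leaves your network. Here is a minimal sketch of that local step, assuming simple regular expressions for e-mail addresses and phone-like numbers. The patterns and the `redact` function are illustrative assumptions; production PII detection needs considerably more than this.

```python
import re

# Illustrative patterns only; they will miss many real-world formats.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace e-mail addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


if __name__ == "__main__":
    raw = "Contact jane.doe@example.com or +1 (555) 123-4567 today."
    # Only the redacted string would be sent on to a cloud API.
    print(redact(raw))
```

Running redaction as a separate local function keeps the raw text out of any code path that talks to the cloud API.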

6.5. When Local Makes Sense

- Regulation forbids cloud data.

- You need ultra-low latency.

- You process large volumes (cost control).

- Your design mandates total privacy.

7. Pricing: OpenAI vs API vs FamilyPro.io

7.1. Chat Plans (End Users)

| Plan | Monthly Price | Notes |
|---|---|---|
| ChatGPT Plus | $20 | Priority access, higher limits |
| ChatGPT Pro | $200 | Designed for high usage and early feature access |
| FamilyPro.io | $5.50 | Same features as ChatGPT Plus; offers shared GPT-5 access at lower cost, for individuals or small teams not needing enterprise controls. |

7.2. API (Usage-Based)

| Model | Input $/1M | Output $/1M | Context |
|---|---|---|---|
| GPT-5 | $1.25 | $10.00 | Up to 400K |
| GPT-5 mini | $0.25 | $2.00 | Up to 400K |
| GPT-5 nano | $0.05 | $0.40 | Up to 400K |

API is often more cost-effective for heavy use than chat subscriptions.
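Whether the API or a subscription is cheaper depends on token volume, so it helps to run the numbers. The sketch below uses the per-million-token prices from the table above. The `api_cost` function and the example volumes (2M input, 500K output tokens per month) are assumptions for illustration, not measured usage.

```python
# USD per 1M tokens (input, output), copied from the pricing table above.
PRICES = {
    "gpt-5": (1.25, 10.00),
    "gpt-5-mini": (0.25, 2.00),
    "gpt-5-nano": (0.05, 0.40),
}


def api_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the usage-based cost in USD for the given token counts."""
    in_price, out_price = PRICES[model]
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000


if __name__ == "__main__":
    # Assumed monthly volume; substitute your own measurements.
    for model in PRICES:
        cost = api_cost(model, input_tokens=2_000_000, output_tokens=500_000)
        print(f"{model}: ${cost:.2f} per month")
```

Comparing these figures against the flat plan prices in section 7.1 shows where the break-even point falls for a given workload.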

8. Practical Playbooks

For Developers:

- Short patches = O3 style.

- Debug + redesign = GPT-5 deep reasoning.

- Agent workflows = Use Responses API plus GPT-5.

For Researchers & Writers:

- Long interviews & literature reviews = GPT-5 with structured retrieval.

- Massive context = Test Gemini variants (1M tokens).

For Biz Teams:

- Microsoft ecosystem = Copilot.

- Long-form conservative output = Claude (200K context).

- Broad “assistant of everything” = ChatGPT-5.

9. FAQ

What are the pros and cons of ChatGPT?

Pros: unified routing, strong long-context retrieval, better reliability, mature tools.

Cons: not AGI, deep-mode latency, cloud-only, usage-based pricing.

Which is better, O3 or 4.5 ChatGPT?

For immediate responses, O3 wins. For general use, GPT-4.1 is stable. For ultimate flexibility, GPT-5 is the default winner.

Can I run ChatGPT locally?

No. GPT-5 cannot be run locally. Consider LLaMA/Mistral for local inference, or a hybrid architecture.

What’s new in ChatGPT 4.1?

Better code generation, instruction-following, and longer API context support compared to GPT-4.

ChatGPT vs Copilot—what should I use?

Need Microsoft 365 integration? Go Copilot. For general assistant + broad tools, go GPT-5.

ChatGPT vs Gemini vs Claude—who’s best?

Gemini excels at ultra-long context (1M). Claude is a conservative choice for long-form work (200K). ChatGPT-5 balances both and adds an agent ecosystem.

How long can a ChatGPT conversation be?

GPT-5 API allows up to ~400K tokens; Chat limits depend on subscription tier—check official notes.
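A quick pre-flight check can tell you whether a prompt is likely to fit before you send it. The sketch below assumes the 400K combined limit quoted above and a rough rule of thumb of about four characters per token for English text. Both helper functions are hypothetical, and a real tokenizer would give a more accurate count.

```python
CONTEXT_LIMIT = 400_000  # combined input + output tokens, per the figure above


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token estimate; the 4 chars/token ratio is an assumption."""
    return int(len(text) / chars_per_token) + 1


def fits_in_context(prompt: str, max_output_tokens: int) -> bool:
    """Check whether prompt plus reserved output stays within the limit."""
    return estimate_tokens(prompt) + max_output_tokens <= CONTEXT_LIMIT


if __name__ == "__main__":
    document = "word " * 100_000  # 500,000 characters of sample text
    print(fits_in_context(document, max_output_tokens=8_000))
```

Because the estimate is approximate, leaving a safety margin below the limit is a sensible precaution.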

10. Final Recommendations

- Individuals & creators: Use ChatGPT-5; for cost savings, evaluate FamilyPro.io (check terms).

- Microsoft-centric orgs: Use Copilot for M365 workflows, GPT-5 API for agents.

- Ultra-long context apps: Consider Gemini or pipeline-based chunking.

- Policy-sensitive summarization: Claude offers conservative output with 200K context.

- Zero-cloud requirements: Use LLaMA/Mistral on-prem and call GPT-5 only when permitted.
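The "pipeline-based chunking" option in the list above can be sketched as a simple splitter with overlap, so that context carries across chunk boundaries. This version splits on characters for simplicity. The `chunk_text` function and its parameters are illustrative assumptions, and a production pipeline would more likely split on tokens or document structure.

```python
def chunk_text(text: str, chunk_size: int, overlap: int = 0) -> list[str]:
    """Split text into chunks of at most chunk_size characters.

    Consecutive chunks share `overlap` characters.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")

    step = chunk_size - overlap
    chunks = []
    start = 0
    while start < len(text):
        chunks.append(text[start:start + chunk_size])
        # Stop once this chunk reaches the end, avoiding a redundant tail.
        if start + chunk_size >= len(text):
            break
        start += step
    return chunks


if __name__ == "__main__":
    for piece in chunk_text("abcdefghij", chunk_size=4, overlap=1):
        print(piece)
```

Each chunk can then be summarized or queried separately and the partial results merged in a final pass.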
